AI Is Changing Our Lives

May 1, 2023

Artificial intelligence, known as AI, is the science and engineering of creating intelligent machines that can perform tasks previously performed only by humans and other intelligent animals. Recently, this work has produced software that generates text or images from user-provided prompts.

Throughout this article, there are small samples of AI-generated writing to show readers the software in action, although most of the article was written by humans.

One of the risks of AI is that it can be used to create deepfakes. Deepfakes are videos or images that have been manipulated to make it look like someone is saying or doing something they never actually said or did. Deepfakes can be used to spread misinformation, damage someone’s reputation or even commit fraud.

Another risk of AI is that it can be used to automate jobs. As AI becomes more sophisticated, it is becoming possible to automate more and more tasks that were once done by humans. This could lead to job losses and reduced demand for human labor.

There are also many potential benefits to using AI. AI can be used to improve efficiency, productivity and accuracy. It can also be used to create new products and services, and to solve problems that were once thought to be impossible.

Interestingly, AI has been all over the news lately. Some people stumble upon it by chance, while others avoid it because of ominous news reports. It is important to weigh the risks and benefits of AI before deciding whether to use it. In some cases, the benefits may outweigh the risks; in others, the risks may outweigh the benefits.

You may already be surrounded by AI if you have an Amazon Alexa device or have asked your Apple or Android phone a question. In academia, the most prevalent AI is the chatbot ChatGPT.

Although the program only generates text, it has already raised concerns. The Guardian's Alex Hern reports, "With fears in academia growing about a new AI chatbot that can write convincing essays – even if some facts it uses aren't strictly true – the Silicon Valley firm behind a chatbot released last month is racing to 'fingerprint' its output to head off a wave of 'AIgiarism' – or AI-assisted plagiarism." Programs such as GPTZero can help detect this kind of AI plagiarism.

AI cannot, however, be creative. In a TED Talk, brain scientist Henning Beck distinguished between deep learning and brain learning, using the example of a chair. Deep learning, or AI, would say that a chair is an object with four legs and a backrest.

However, when Beck showed the audience a series of PowerPoint slides and asked which objects were chairs, nearly all participants agreed that every object could be classified as a chair.

AI classifies objects by fixed features like these through a process called deep learning. Humans do not. In Beck's example, a human could describe a chair simply as a movable item you can sit on. Based on the presentation, the way humans learn appears to make them more capable than computers at this kind of task.

In addition to creating realistic images, AI can perform tasks like reading a screen, opening a document, typing notes or writing emails. These are all tasks that humans also perform. AI learns to do these tasks using a structure known as a neural network. Large neural networks can contain millions of artificial neurons linked by billions of connections, and the neurons work in groups called layers. The name comes from the neuron, the brain cell that transmits information.

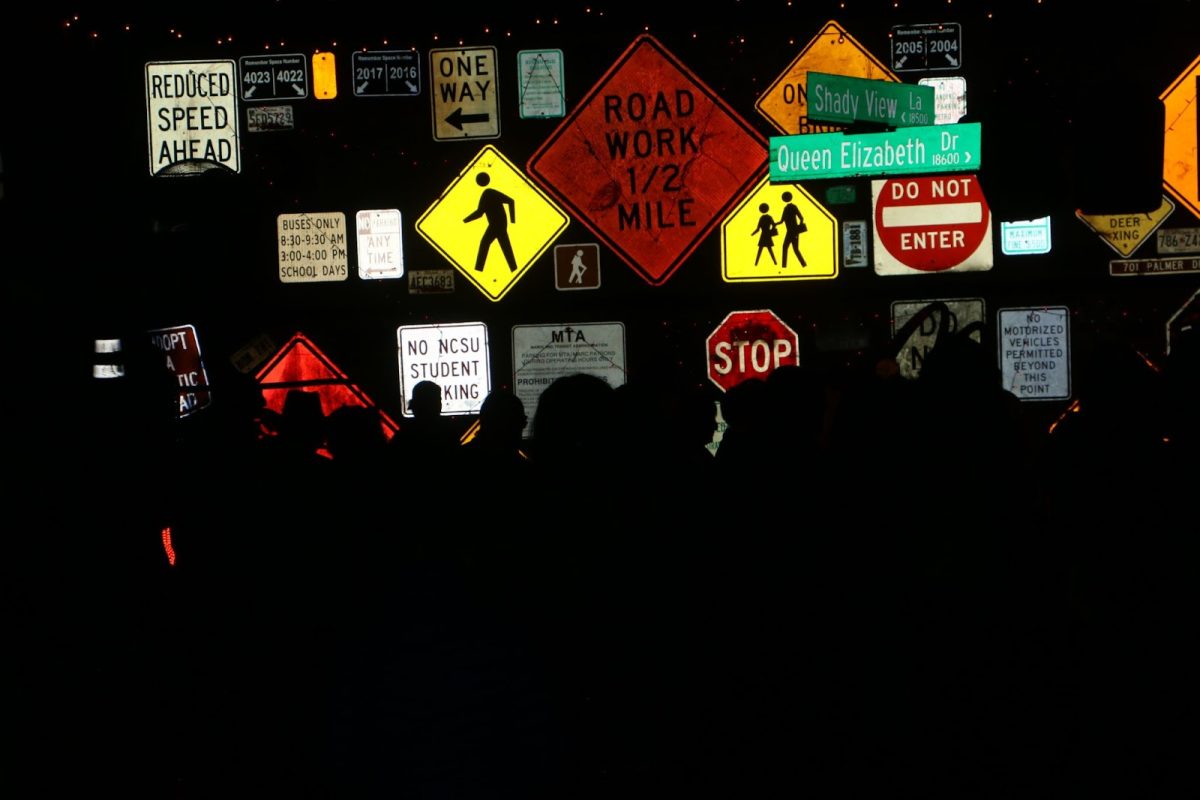

One of the most controversial aspects of artificial intelligence is deepfakes. The Merriam-Webster dictionary defines a deepfake as "a video of a person in which their face or body has been digitally altered so that they appear to be someone else, typically used maliciously or to spread false information." However, deepfakes are not just videos. They can also be photos, audio or even poems. DALL-E and ChatGPT are among the best-known systems that can be used to generate this kind of content.

It is not hard to imagine that some companies would have the resources to make thousands of copies of a fake face or body. "The more insidious impact of deepfakes, along with other synthetic media and fake news, is to create a zero-trust society, where people cannot, or no longer bother to, distinguish truth from falsehood. And when trust is eroded, it is easier to raise doubts about specific events," The Guardian's Ian Sample notes.

What is your opinion on AI? Could you tell which sections of this article the AI wrote? One of the AI bots we used identified this article as a book rather than a newspaper article, and part of the introduction was edited by AI. The AI tools used were Google Bard and Dreamily.